Those of you who have previously read my blog know that I rarely use children’s games to address language goals. However, over the summer I have been working on improving executive function abilities (EFs) of some of the language impaired students on my caseload. As such, I found select children’s games to be highly beneficial for improving language-based executive function abilities.

For those of you who are only vaguely familiar with this concept, executive functions are higher-level cognitive processes involved in the inhibition of thought, action, and emotion, which are located in the prefrontal cortex of the frontal lobe of the brain. The development of executive functions begins in early infancy, but it can be easily disrupted by a number of adverse environmental and organic experiences (e.g., psychosocial deprivation, trauma). Furthermore, research in this area indicates that children with language impairments present with executive function weaknesses which require remediation.

EF components include working memory, inhibitory control, planning, and set-shifting.

- Working memory
  - Ability to store and manipulate information in mind over brief periods of time
- Inhibitory control
  - Suppressing responses that are not relevant to the task
- Set-shifting
  - Ability to shift behavior in response to changes in tasks or environment

Simply put, EFs contribute to the child’s ability to sustain attention, ignore distractions, and succeed in academic settings. By now some of you must be wondering: “So what does Hedbanz have to do with any of it?”

Well, Hedbanz is a quick-paced multiplayer (2-6 people) game of “What Am I?” for children ages 7 and up. Players get 3 chips and wear a “picture card” in their headband. They need to ask questions in rapid succession to figure out what they are. “Am I fruit?” “Am I a dessert?” “Am I sports equipment?” When they figure it out, they get rid of a chip. The first player to get rid of all three chips wins.

The game sounds deceptively simple. Yet any SLPs or parents who have played it with their language impaired students/children would be quick to note how extraordinarily difficult it is for the children to figure out what their card is. Interestingly, in my clinical experience, I’ve noticed that it’s not just moderately language impaired children who present with difficulty playing this game. Even my bright teens of average intelligence, who have passed vocabulary and semantic flexibility testing (such as the WORD Test 2-Adolescent or the Vocabulary Awareness subtest of the Test of Integrated Language and Literacy Skills), struggle significantly with their language organization when playing this game.

So what makes Hedbanz so challenging for language impaired students? Primarily, it’s the involvement and coordination of the multiple executive functions during the game. In order to play Hedbanz effectively and effortlessly, the following EF involvement is needed:

- Task Initiation
  - Students with executive function impairments will often “freeze up” and, as a result, may have difficulty initiating questions in the game because many will not know what kind of questions to ask, even after extensive explanations and elaborations by the therapist.
- Organization
  - Students with executive function impairments will present with difficulty organizing their questions by meaningful categories and as a result will frequently lose their train of thought in the game.
- Working Memory
  - This executive function requires the student to keep key information in mind as well as keep track of whatever questions they have already asked.
- Flexible Thinking
  - This executive function requires the student to consider a situation from multiple angles in order to figure out the quickest and most effective way of arriving at a solution. During the game, students may present with difficulty flexibly generating enough organizational categories in order to be effective participants.
- Impulse Control
  - Many students with difficulties in this area may blurt out an inappropriate category or an inappropriate question without thinking it through first.
  - They may also present with difficulty set-shifting. To illustrate, one of my 13-year-old students with ASD kept repeating the same question when it was his turn, even though both the other players and I had already told him the answer.
- Emotional Control
  - This executive function will help students keep their emotions in check when the game becomes too frustrating. Many students with difficulties in this area will begin reacting behaviorally when things don’t go their way and they are unable to figure out what their card is quickly enough. As a result, they may have difficulty mentally regrouping and reorganizing their questions when something goes wrong in the game.
- Self-Monitoring
  - This executive function allows the students to figure out how well or how poorly they are doing in the game. Students with poor insight into their own abilities may present with difficulty understanding that they are doing poorly and may require explicit instruction in order to change their question types.
- Planning and Prioritizing
  - Students with poor abilities in this area will present with difficulty prioritizing their questions during the game.

Consequently, all of the above executive functions can be addressed via language-based goals. However, before I cover that, I’d like to review some of my session procedures.

Typically, long before game initiation, I use the cards from the game to prep the students by teaching them how to categorize and classify presented information so they can play the game effectively and efficiently.

Rather than using the “tip cards”, I explain to the students how to categorize information effectively.

This, in turn, becomes a great opportunity for teaching students relevant vocabulary words, which can be extended far beyond playing the game.

I begin the session by explaining to the students that pretty much everything can be roughly divided into two categories: animate (living) or inanimate (nonliving) things. I explain that humans, animals, as well as plants belong to the category of living things, while everything else belongs to the category of inanimate objects. I further divide the category of inanimate things into naturally existing and man-made items. I explain to the students that the naturally existing category includes bodies of water, landmarks, as well as things in space (moon, stars, sky, sun, etc.). In contrast, things constructed in factories or made by people would be examples of man-made objects (e.g., buildings, aircraft, etc.).

When I’m confident that the students understand my general explanations, we move on to discuss further refinement of these broad categories. If a student determines that their card belongs to the category of living things, we discuss how from there the student can further determine whether they are an animal, a plant, or a human. If a student determines that their card belongs to the animal category, we discuss how we can narrow down which animal is depicted on their card by asking questions regarding its habitat (“Am I a jungle animal?”) and classification (“Am I a reptile?”). From there, discussion of attributes prominently comes into play. We discuss shapes, sizes, colors, accessories, etc., until the student is able to confidently figure out which animal is depicted on their card.

In contrast, if the student’s card belongs to the inanimate category of man-made objects, we further subcategorize the information by the object’s location (“Am I found outside or inside?”; “Am I found in ___ room of the house?”, etc.), utility (“Can I be used for ___?”), as well as attributes (e.g., size, shape, color, etc.).

Thus, in addition to improving the students’ semantic flexibility skills (production of definitions, synonyms, attributes, etc.), the game teaches the students to organize and compartmentalize information in order to arrive at a conclusion as effectively and quickly as possible.

Now we are ready to discuss what types of EF language-based goals SLPs can target by simply playing this game.

1. Initiation: Student will initiate questioning during an activity in __ number of instances per 30-minute session given (maximal, moderate, minimal) type of ___ (phonemic, semantic, etc.) prompts and __ (visual, gestural, tactile, etc.) cues by the clinician.

2. Planning: Given a specific routine, student will verbally state the order of steps needed to complete it with __% accuracy given (maximal, moderate, minimal) type of ___ (phonemic, semantic, etc.) prompts and __ (visual, gestural, tactile, etc.) cues by the clinician.

3. Working Memory: Student will repeat clinician provided verbal instructions pertaining to the presented activity, prior to its initiation, with 80% accuracy given (maximal, moderate, minimal) type of ___ (phonemic, semantic, etc.) prompts and __ (visual, gestural, tactile, etc.) cues by the clinician.

4. Flexible Thinking: Following a training by the clinician, student will generate at least __ questions needed for task completion (e.g., winning the game) with __% accuracy given (maximal, moderate, minimal) type of ___ (phonemic, semantic, etc.) prompts and __ (visual, gestural, tactile, etc.) cues by the clinician.

5. Organization: Student will use predetermined written/visual cues during an activity to assist self with organization of information (e.g., questions to ask) with __% accuracy given (maximal, moderate, minimal) type of ___ (phonemic, semantic, etc.) prompts and __ (visual, gestural, tactile, etc.) cues by the clinician.

6. Impulse Control: During the presented activity, the student will curb blurting out inappropriate responses (by silently counting to 3 prior to providing his/her response) in __ number of instances per 30-minute session given (maximal, moderate, minimal) type of ___ (phonemic, semantic, etc.) prompts and __ (visual, gestural, tactile, etc.) cues by the clinician.

7. Emotional Control: When upset, student will verbalize his/her frustration (vs. behavioral acting out) in __ number of instances per 30-minute session given (maximal, moderate, minimal) type of ___ (phonemic, semantic, etc.) prompts and __ (visual, gestural, tactile, etc.) cues by the clinician.

8. Self-Monitoring: Following the completion of an activity (e.g., game), student will provide insight into own strengths and weaknesses during the activity (recap) by verbally naming the instances in which s/he did well and the instances in which s/he struggled, with __% accuracy given (maximal, moderate, minimal) type of ___ (phonemic, semantic, etc.) prompts and __ (visual, gestural, tactile, etc.) cues by the clinician.

There you have it. This one simple game doesn’t just target a plethora of typical expressive language goals. It can effectively target and improve language-based executive function goals as well. Considering the fact that it sells for approximately $12 on Amazon.com, that’s a pretty useful therapy material to have in one’s clinical tool repertoire. For fancier versions, clinicians can use “Jeepers Peepers” photo card sets sold by Super Duper Inc. Strapped for cash due to a highly limited budget? You can find plenty of free materials online if you simply search for “Hedbanz cards” on Google. So have a little fun in therapy while your students learn something valuable in the process, and play Hedbanz today!

Related Smart Speech Therapy Resources:

In the past several years, I wrote a series of posts on the topic of improving clinical practices in speech-language pathology. Some of these posts were based on my clinical experience as backed by research, while others summarized key point from articles written by prominent colleagues in our field such as Dr. Alan Kamhi, Dr. David DeBonnis, Dr. Andrew Vermiglio, etc.

In the past several years, I wrote a series of posts on the topic of improving clinical practices in speech-language pathology. Some of these posts were based on my clinical experience as backed by research, while others summarized key point from articles written by prominent colleagues in our field such as Dr. Alan Kamhi, Dr. David DeBonnis, Dr. Andrew Vermiglio, etc.

.JPG)

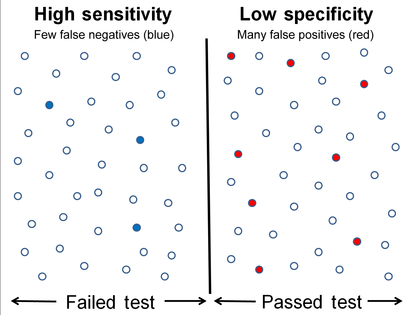

Here is the problem though: I only see the above follow-up steps in a small percentage of cases. In the vast majority of cases in which score discrepancies occur, I see the examiners ignoring the weaknesses without follow up. This of course results in the child not qualifying for services.

Here is the problem though: I only see the above follow-up steps in a small percentage of cases. In the vast majority of cases in which score discrepancies occur, I see the examiners ignoring the weaknesses without follow up. This of course results in the child not qualifying for services. So the next time you see a pattern of strengths and weaknesses and testing, even if it amounts to a total average score, I urge you to dig deeper. I urge you to investigate why this pattern is displayed in the first place. The same goes for you – parents! If you are looking at average total scores but seeing unexplained weaknesses in select testing areas, start asking questions! Ask the professional to explain why those deficits are occuring and tell them to dig deeper if you are not satisfied with what you are hearing. All students deserve access to FAPE (Free and Appropriate Public Education). This includes access to appropriate therapies, they may need in order to optimally function in the classroom.

So the next time you see a pattern of strengths and weaknesses and testing, even if it amounts to a total average score, I urge you to dig deeper. I urge you to investigate why this pattern is displayed in the first place. The same goes for you – parents! If you are looking at average total scores but seeing unexplained weaknesses in select testing areas, start asking questions! Ask the professional to explain why those deficits are occuring and tell them to dig deeper if you are not satisfied with what you are hearing. All students deserve access to FAPE (Free and Appropriate Public Education). This includes access to appropriate therapies, they may need in order to optimally function in the classroom.

Here’s a familiar scenario to many SLPs. You’ve administered several standardized language tests to your student (e.g., CELF-5 & TILLS). You expected to see roughly similar scores across tests. Much to your surprise, you find that while your student attained somewhat average scores on one assessment, s/he had completely bombed the second assessment, and you have no idea why that happened.

Here’s a familiar scenario to many SLPs. You’ve administered several standardized language tests to your student (e.g., CELF-5 & TILLS). You expected to see roughly similar scores across tests. Much to your surprise, you find that while your student attained somewhat average scores on one assessment, s/he had completely bombed the second assessment, and you have no idea why that happened. Actually, my last statement is rather debatable. A careful perusal of the CELF – 5 reveals that its normative sample of 3000 children included a whopping 23% of children with language-related disabilities. In fact, the folks from the

Actually, my last statement is rather debatable. A careful perusal of the CELF – 5 reveals that its normative sample of 3000 children included a whopping 23% of children with language-related disabilities. In fact, the folks from the

The TILLS is a far less known assessment than the CELF-5 yet in the few years it has been out on the market it really made its presence felt by being a solid assessment tool due to its valid and reliable psychometric properties. Again, the venerable Dr. Carol Westby had already done such an excellent job reviewing its

The TILLS is a far less known assessment than the CELF-5 yet in the few years it has been out on the market it really made its presence felt by being a solid assessment tool due to its valid and reliable psychometric properties. Again, the venerable Dr. Carol Westby had already done such an excellent job reviewing its  Then there’s a variation of this assertion, which I have seen in several Facebook groups: “

Then there’s a variation of this assertion, which I have seen in several Facebook groups: “