As a speech-language pathologist (SLP) working in a psychiatric setting, I routinely address social pragmatic language goals as part of my clinical practice. Finding the right approach to the treatment of social pragmatic language disorders has been challenging, to say the least. That is because the efficacy of social communication interventions continues to be quite limited. Studies to date continue to show questionable results and limited carryover, while measurements of improvement are frequently subjective, biased, and susceptible to placebo effects, maturation effects, and regression to the mean. However, despite the significant challenges to clinical practice in this area, the use of videos for treatment purposes shows emerging promise.

Test Review: Clinical Assessment of Pragmatics (CAPs)

Today, due to popular demand, I am reviewing the Clinical Assessment of Pragmatics (CAPs) for children and young adults ages 7–18, developed by the Lavi Institute and sold by WPS Publishing. Readers of this blog know that I specialize in working with children diagnosed with psychiatric impairments and behavioral and emotional difficulties. They are also aware that I am constantly on the lookout for good-quality social communication assessments, given the notorious dearth of such instruments in this area of language.

Why “good grades” do not automatically rule out “adverse educational impact”

As a speech-language pathologist (SLP) working with school-age children, I frequently assess students whose language and literacy abilities adversely impact their academic functioning. For the parents of school-age children with suspected language and literacy deficits, as well as for the SLPs tasked with screening and evaluating them, the concept of ‘academic impact’ comes up on a daily basis. In fact, not a day goes by without a variation of the question “Is there evidence of academic impact?” being discussed in one of the many Facebook groups dedicated to speech pathology issues.

Editable Report Template and Tutorial for the Test of Integrated Language and Literacy

Today I am introducing my newest report template for the Test of Integrated Language and Literacy.

This 16-page fully editable report template discusses the testing results and includes the following components:

On the Limitations of Using Vocabulary Tests with School-Aged Students

Those of you who read my blog on a semi-regular basis know that I spend a considerable amount of time in both of my work settings (an outpatient school located in a psychiatric hospital as well as private practice) conducting language and literacy evaluations of preschool and school-aged children 3–18 years of age. During that process, I also review a large number of outside speech and language evaluations. Interestingly, I have noticed that no matter the child’s age (7 or 17), some form of receptive and/or expressive vocabulary testing is invariably mentioned in the language report.

What are They Trying To Say? Interpreting Music Lyrics for Figurative Language Acquisition Purposes

In my last post, I described how I use obscurely worded newspaper headlines to improve my students’ interpretation of ambiguous and figurative language. Today, I want to delve further into this topic by describing the utility of interpreting music lyrics for language therapy purposes. I really like using music lyrics in language treatment. Not only do my students and I get to listen to really cool music, but we also get an opportunity to define a variety of literary devices (e.g., hyperbole, simile, metaphor, etc.) as well as to identify them in song lyrics and interpret their meaning.

Have I Got This Right? Developing Self-Questioning to Improve Metacognitive and Metalinguistic Skills

Many of my students with Developmental Language Disorder (DLD) lack insight and have poorly developed metalinguistic (the ability to think about and discuss language) and metacognitive (the ability to think about and reflect upon one’s own thinking) skills. This, of course, creates significant challenges for them in both social and academic settings. Not only do they have a poorly developed inner dialogue for critical thinking purposes, but they also present with significant self-monitoring and self-correcting challenges during speaking and reading tasks.

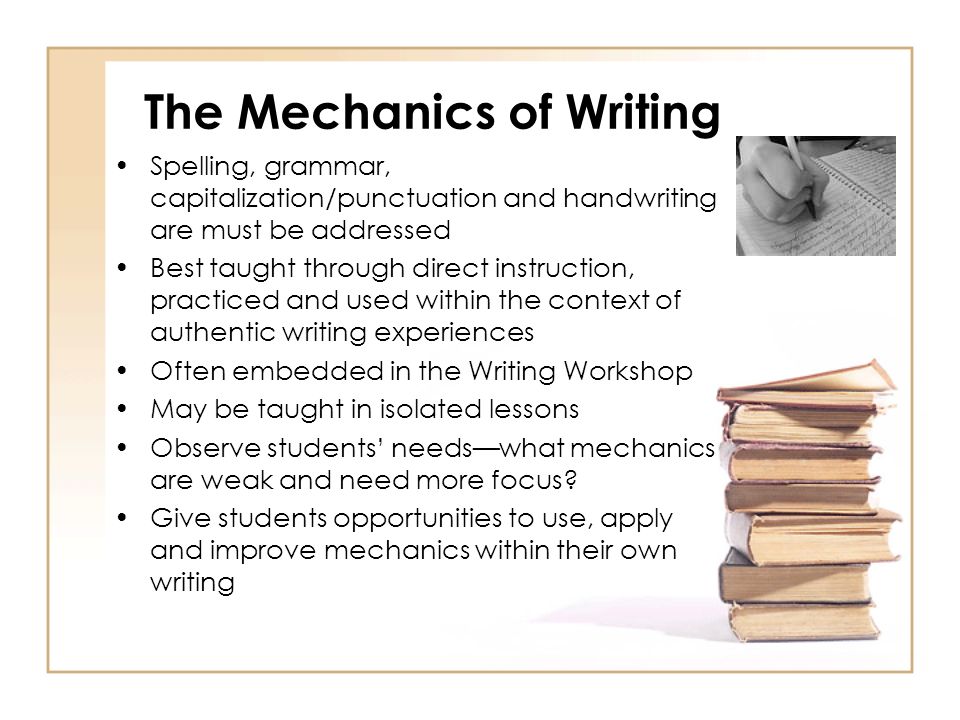

Components of Qualitative Writing Assessments: What Exactly are We Trying to Measure?

Writing! The one assessment area that challenges many SLPs on a daily basis! If one polls 10 SLPs on the topic of writing, one will get 10 completely different responses, ranging from agreement to rejection, along with diverse opinions regarding what should actually be assessed and how exactly it should be done.

Consequently, today I want to focus on the basics involved in the assessment of adolescent writing. Why adolescents, you may ask? Frankly, because many SLPs (myself included) are far more likely to assess the writing abilities of adolescents than those of elementary-aged children.

Often, when students are younger and their literacy abilities are weaker, SLPs may not get to the assessment of writing because the students present with so many other deficits that take precedence intervention-wise. However, as students get older and academic requirements increase dramatically, SLPs may be asked more frequently to assess the students’ writing abilities, because difficulties in this area significantly affect them across a variety of classes and subjects.

So what can we assess when it comes to writing? In the words of Helen Lester’s character ‘Pookins’: “Lots!” There are various types of writing that can be assessed, the most common of which are expository, persuasive, and fictional. Each of these can be used for assessment purposes in a variety of ways.

To illustrate, if we choose to analyze a student’s written fictional narratives, then we may broadly analyze two aspects of the student’s writing: contextual conventions and writing composition.

The former looks at such aspects of writing as the correct use of spelling, punctuation, and capitalization, as well as paragraph formation, etc.

The latter looks at the nitty-gritty elements involved in plot development. These include effective use of literate vocabulary, plotline twists, character development, use of dialogue, etc.

Perhaps we want to analyze the student’s persuasive writing abilities. After all, high school students are expected to use this type of writing frequently in their essays. Persuasive writing is a complex genre that is particularly difficult for students with language-learning difficulties, who struggle to produce essays that are clear, logical, convincing, and appropriately sequenced, and that take into consideration opposing points of view. It is exactly for that reason that persuasive writing tasks are so well suited for assessment purposes.

But what exactly are we looking for, analysis-wise? What should a typical 15-year-old’s persuasive essay contain?

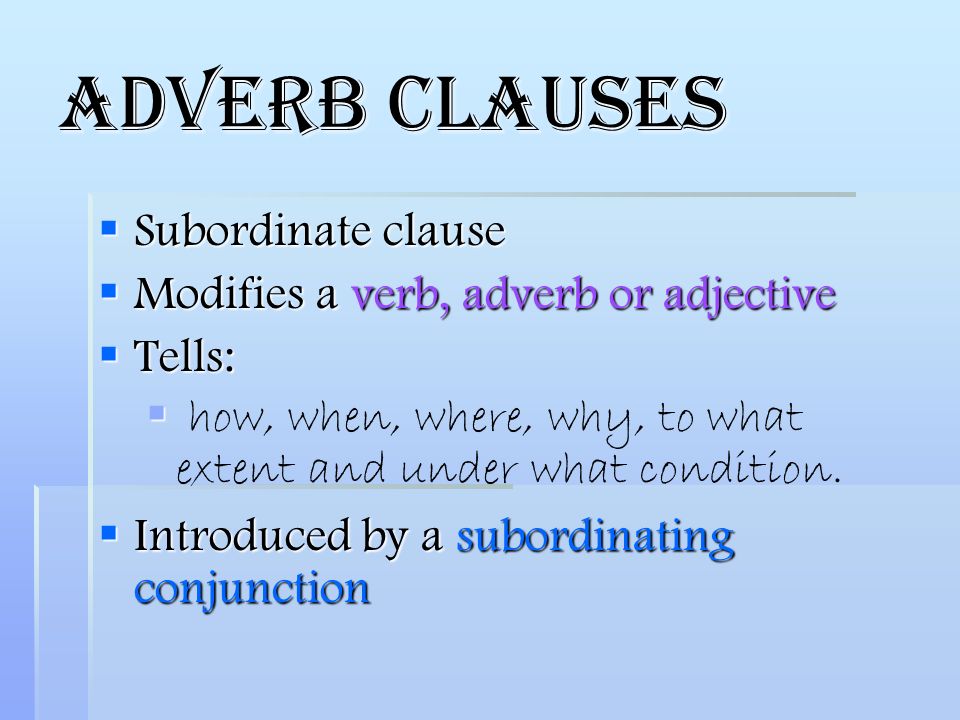

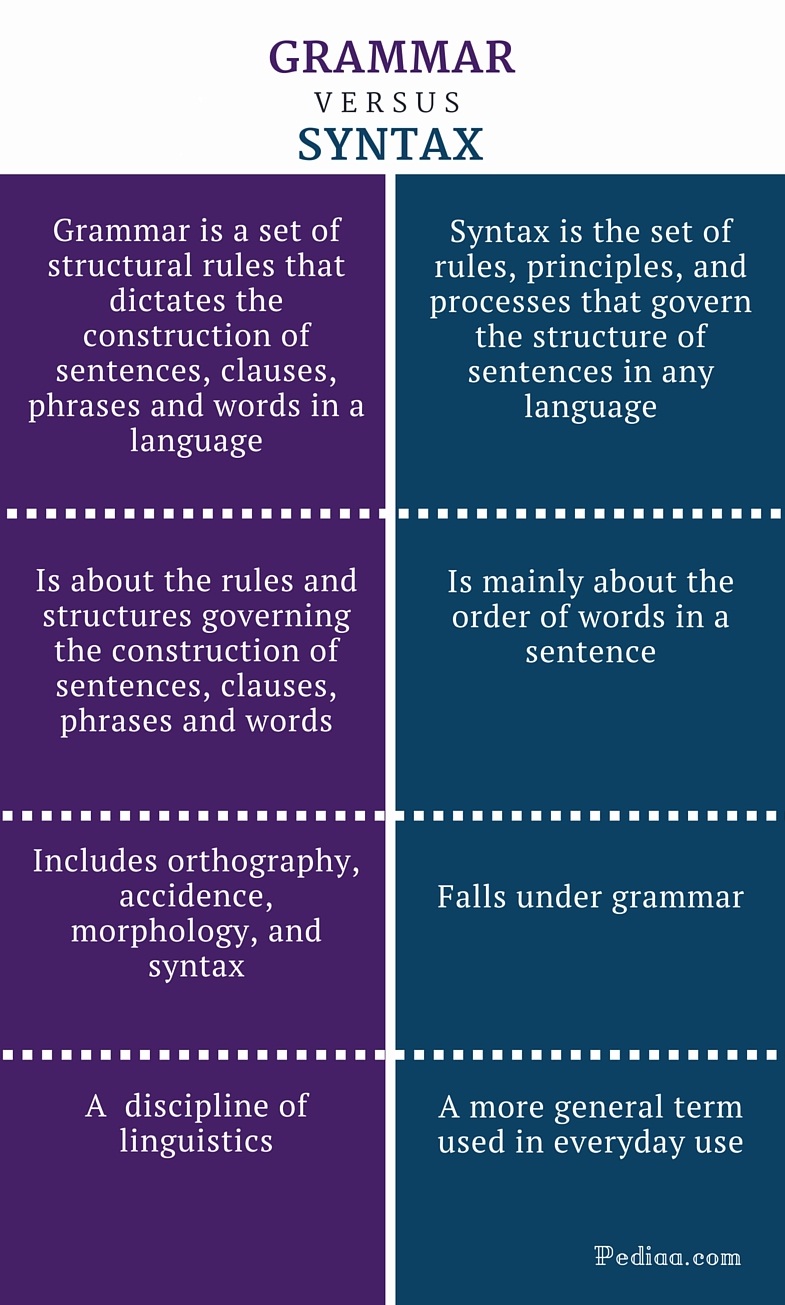

With respect to syntax, a typical student of that age is expected to write complex sentences containing nominal, adverbial, and relative clauses.

With respect to semantics, effective persuasive essays require the use of low-frequency literate vocabulary, such as later-developing connectors (e.g., first of all, next, for this reason, on the other hand, consequently, finally, in conclusion) as well as metalinguistic and metacognitive verbs (“metaverbs”) that refer to acts of speaking (e.g., assert, concede, predict, argue, imply) and thinking (e.g., hypothesize, remember, doubt, assume, infer).

With respect to pragmatics, as students mature, their sensitivity to the perspectives of others improves; as a result, their persuasive essays increase in length (i.e., total number of words produced) and they are able to offer a greater number of different reasons to support their own opinions (Nippold, Ward-Lonergan, & Fanning, 2005).

Now let’s apply our knowledge by analyzing a writing sample from a 15-year-old with suspected literacy deficits. This 10th-grade student was provided with a written prompt entitled “The Circus Controversy,” first described in the Nippold, Ward-Lonergan, and Fanning (2005) study:

“People have different views on animals performing in circuses. For example, some people think it is a great idea because it provides lots of entertainment for the public. Also, it gives parents and children something to do together, and the people who train the animals can make some money. However, other people think having animals in circuses is a bad idea because the animals are often locked in small cages and are not fed well. They also believe it is cruel to force a dog, tiger, or elephant to perform certain tricks that might be dangerous. I am interested in learning what you think about this controversy, and whether or not you think circuses with trained animals should be allowed to perform for the public. I would like you to spend the next 20 minutes writing an essay. Tell me exactly what you think about the controversy. Give me lots of good reasons for your opinion. Please use your best writing style, with correct grammar and spelling. If you aren’t sure how to spell a word, just take a guess.” (Nippold, Ward-Lonergan, & Fanning, 2005)

He produced the following written sample during the allotted 20 minutes.

Analysis: This student was able to generate a short, three-paragraph composition containing an introduction and a body but no definitive conclusion. His persuasive essay was judged to be very immature for his grade level due to significant disorganization, a limited ability to support his point of view, and the presence of tangential information in the introduction. The composition was also significantly compromised by numerous writing mechanics errors (punctuation, capitalization, and spelling), which further impacted the coherence and cohesiveness of his written output.

The student’s introduction began with an inventive dialogue, which was irrelevant to the body of his persuasive essay. He did have three important points relevant to the body of the essay: animal cruelty, danger to the animals, and the potential for the animals to harm humans. However, he was unable to adequately develop those points into full paragraphs. The notable absence of proofreading and editing further contributed to the composition’s lack of clarity. These difficulties, coupled with the lack of a conclusion, were not commensurate with grade-level expectations.

Based on the above writing sample, the student’s persuasive composition content (thought formulation and elaboration) was judged to be significantly immature for his grade level and commensurate with the abilities of a much younger student. The student’s composition contained several emerging claims that suggested a vague position. However, although the student attempted to back up his opinion and support his position (animals should not be performing in circuses), he was ultimately unable to do so in a coherent and cohesive manner.

Now that we know what the student’s writing difficulties look like, the following goals would be applicable to his writing remediation:

Long-Term Goals: Student will improve his written abilities for academic purposes.

Short-Term Goals:

- Student will appropriately utilize parts of speech (e.g., adjectives, adverbs, prepositions, etc.) in compound and complex sentences.

- Student will use a variety of sentence types for story composition purposes (e.g., declarative, interrogative, imperative, and exclamatory sentences).

- Student will correctly use past, present, and future verb tenses during writing tasks.

- Student will utilize appropriate punctuation at the sentence level (e.g., periods, commas, colons, quotation marks in dialogue, apostrophes in singular possessives, etc.).

- Student will utilize appropriate capitalization at the sentence level (e.g., capitalize proper nouns, holidays, product names, titles with names, initials, geographic locations, historical periods, special events, etc.).

- Student will use prewriting techniques to generate writing ideas (e.g., list keywords, state key ideas, etc.).

- Student will determine the purpose of his writing and his intended audience in order to establish the tone of his writing as well as outline the main idea of his writing.

- Student will generate a draft in which information is organized in chronological order via use of temporal markers (e.g., “meanwhile,” “immediately”) as well as cohesive ties (e.g., ‘but’, ‘yet’, ‘so’, ‘nor’) and cause/effect transitions (e.g., “therefore,” “as a result”).

- Student will improve coherence and logical organization of his written output via the use of revision strategies (e.g., modify supporting details, use sentence variety, employ literary devices).

- Student will edit his draft for appropriate grammar, spelling, punctuation, and capitalization.

There you have it: a quick and easy qualitative writing assessment that can help SLPs determine the extent of a student’s writing difficulties as well as establish writing remediation targets for intervention purposes.

Using a different type of writing assessment with your students? Please share the details below so we can all benefit from each other’s knowledge of assessment strategies.

References:

- Nippold, M., Ward-Lonergan, J., & Fanning, J. (2005). Persuasive writing in children, adolescents, and adults: a study of syntactic, semantic, and pragmatic development. Language, Speech, and Hearing Services in Schools, 36, 125-138.

Making Our Interventions Count or What’s Research Got To Do With It?

Two years ago, I wrote a blog post entitled “What’s Memes Got To Do With It?”, which summarized key points of Dr. Alan G. Kamhi’s 2004 article “A Meme’s Eye View of Speech-Language Pathology.” It sought to answer the following question: “Why do some terms, labels, ideas, and constructs [in our field] prevail whereas others fail to gain acceptance?”

Today, I would like to discuss another article by Dr. Kamhi, written in 2014 and entitled “Improving Clinical Practices for Children With Language and Learning Disorders.”

This article was written to address the gaps between research and clinical practice with respect to the implementation of evidence-based practice (EBP) in intervention.

Dr. Kamhi begins the article by posing 10 True or False questions for his readers:

- Learning is easier than generalization.

- Instruction that is constant and predictable is more effective than instruction that varies the conditions of learning and practice.

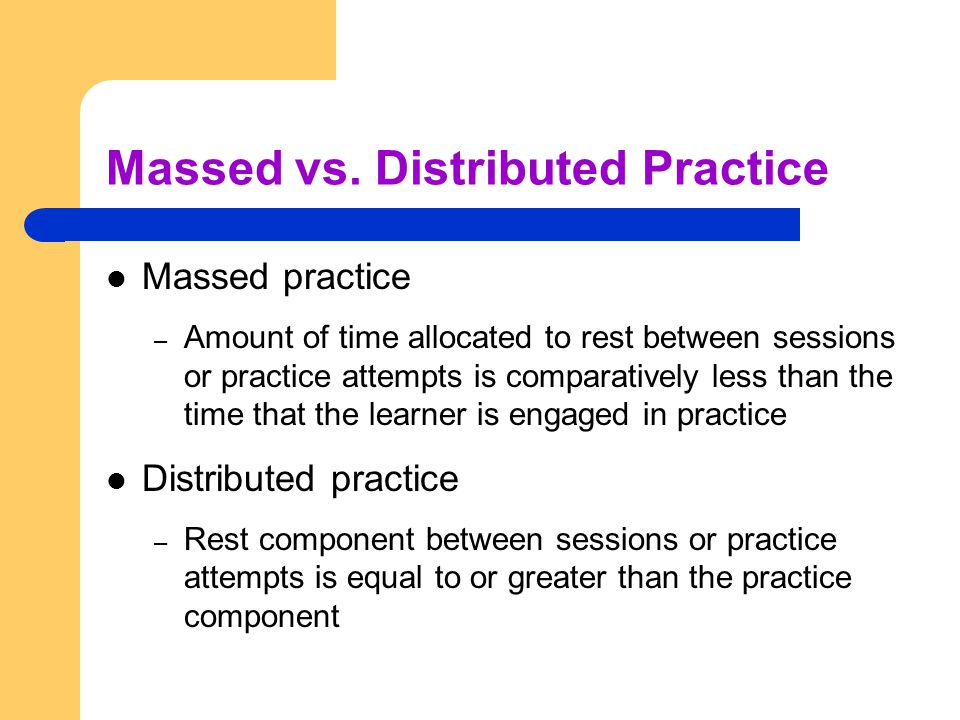

- Focused stimulation (massed practice) is a more effective teaching strategy than varied stimulation (distributed practice).

- The more feedback, the better.

- Repeated reading of passages is the best way to learn text information.

- More therapy is always better.

- The most effective language and literacy interventions target processing limitations rather than knowledge deficits.

- Telegraphic utterances (e.g., push ball, mommy sock) should not be provided as input for children with limited language.

- Appropriate language goals include increasing levels of mean length of utterance (MLU) and targeting Brown’s (1973) 14 grammatical morphemes.

- Sequencing is an important skill for narrative competence.

Guess what? Only statement 8 in the above quiz is true! Every other statement is FALSE!

Now, let’s talk about why that is!

First up is the concept of learning vs. generalization. Here, Dr. Kamhi notes that some clinicians in our field still hold an “outdated behavioral view of learning,” which is neither theoretically nor clinically useful. He explains that, when it comes to generalization, what children truly have difficulty with is “transferring narrow limited rules to new situations.” “Children with language and learning problems will have difficulty acquiring broad-based rules and modifying these rules once acquired, and they also will be more vulnerable to performance demands on speech production and comprehension (Kamhi, 1988)” (93). After all, it is not “reasonable to expect children to use language targets consistently after a brief period of intervention,” and while we hope that “language intervention [is] designed to lead children with language disorders to acquire broad-based language rules,” achieving this is a hugely difficult task to undertake and execute.

Next, Dr. Kamhi addresses instructional factors, specifically the importance of “varying conditions of instruction and practice.” Here, he explains that while contextualized instruction is highly beneficial to learners, unless we inject variability and modify various aspects of instruction (including context, composition, duration, etc.), we run the risk of limiting our students’ long-term outcomes.

After that, Dr. Kamhi addresses the concept of distributed practice (spacing of intervention) and how important it is for teaching children with language disorders. He points out that a number of recent studies have found that “spacing and distribution of teaching episodes have more of an impact on treatment outcomes than treatment intensity” (94).

He also advocates reducing evaluative feedback to learners to “enhance long-term retention and generalization of motor skills.” While he cites research from studies pertaining to speech production, he adds that language learning could also benefit from this practice, as it would reduce conversational disruptions and tuning out on the part of the student.

From there, he addresses the limitations of repetition for specific tasks (e.g., text rereading). He emphasizes how important it is for students to recall and retrieve text (even without correction) rather than repeatedly reread it, as the latter results in limited comprehension and retention of the information read.

After that, he discusses treatment intensity. Here, he emphasizes that a higher dose of instruction will not necessarily result in better therapy outcomes, given the research on the effects of “learning plateaus and threshold effects in language and literacy” (95). We have seen research on this with respect to joint book reading, vocabulary word exposure, etc. As such, at a certain point, increased intensity may actually result in decreased treatment benefits.

His next point, against processing interventions, is very near and dear to my heart. Those of you familiar with my blog know that I have devoted a substantial number of posts to the lack of validity of the central auditory processing disorder (CAPD) diagnosis (as a standalone entity) and have urged clinicians to provide language-based interventions rather than specific auditory interventions, which lack treatment utility. Here, Dr. Kamhi makes a great point: “Interventions that target processing skills are particularly appealing because they offer the promise of improving language and learning deficits without having to directly target the specific knowledge and skills required to be a proficient speaker, listener, reader, and writer.” (95) The problem is that numerous studies of interventions targeting isolated skills (e.g., auditory skills, working memory, slow processing, etc.) clearly indicate that these interventions lack effectiveness. As such, “practitioners should be highly skeptical of interventions that promise quick fixes for language and learning disabilities” (96).

Now let us move on to language, particularly the models we provide to our clients to encourage greater verbal output. Research indicates that when clinicians attempt to expand children’s utterances, they need to provide well-formed language models. Studies show that children select strong input when it is surrounded by weaker input (the surrounding weaker syllables make stronger syllables stand out). As such, clinicians should expand upon or comment on what clients are saying using grammatically complete models rather than telegraphic productions.

From there, let us take a look at Dr. Kamhi’s recommendations for grammar and syntax. Grammatical development goes much further than addressing Brown’s morphemes in therapy and calling it a day. In fact, it is important to understand that children with developmental language disorder (DLD) (#DevLang) do not have difficulty acquiring all morphemes. Rather, studies have shown that they have difficulty learning grammatical morphemes that reflect tense and agreement (e.g., third-person singular, past tense, auxiliaries, copulas, etc.). Consequently, measures developed by Hadley and colleagues (Hadley & Short, 2005; Hadley & Holt, 2006), such as the Tense Marker Total and the Productivity Score, can yield helpful information regarding which grammatical structures to target in therapy.

With respect to syntax, Dr. Kamhi notes that many clinicians erroneously believe that complex syntax should be targeted only when children are much older. The Common Core State Standards (CCSS) do not help this cause, since according to the CCSS, complex syntax should be targeted in grades 2–3, which is far too late. Typically developing children begin developing complex syntax around 2 years of age and begin readily producing it around 3 years of age. As such, clinicians should begin targeting complex syntax in the preschool years rather than waiting until children have mastered all morphemes and clauses (97).

Finally, Dr. Kamhi wraps up his article by offering suggestions regarding the prioritization of intervention goals. Here, he explains that goal prioritization is affected by:

- clinician experience and competencies

- the degree of collaboration with other professionals

- type of service delivery model

- client/student factors

He provides a hypothetical case scenario in which teaching responsibilities are divvied up among three professionals, with the SLP in charge of targeting narrative discourse. Here, he explains that targeting narratives does not involve targeting sequencing abilities: “The ability to understand and recall events in a story or script depends on conceptual understanding of the topic and attentional/memory abilities, not sequencing ability.” He emphasizes that sequencing is not a distinct cognitive process that requires isolated treatment. Yet many SLPs “continue to believe that sequencing is a distinct processing skill that needs to be assessed and treated” (99).

Dr. Kamhi supports the above point with two example passages: one that describes events in a random order and another that follows a logical order of events. He then points out that the randomly ordered story relies exclusively on attention and memory in terms of “sequencing,” while the second story reduces demands on memory due to its logical flow of events. Accordingly, he notes that retelling deficits that seem related to sequencing tend to actually be due to “limitations in attention, working memory, and/or conceptual knowledge.” Hence, instead of targeting sequencing abilities in therapy, SLPs should use contextualized language intervention to target aspects of narrative development (macro- and microstructural elements).

Furthermore, it is important to note that the “sequencing fallacy” affects more than just narratives. It is very prevalent in the intervention process in the form of the ubiquitous “following directions” goal(s). Many clinicians readily create this goal for their clients because they believe it will result in functional therapeutic language gains. However, when one really begins to deconstruct this goal, one will realize that it involves a number of discrete abilities, including memory, attention, concept knowledge, inferencing, etc. Consequently, targeting the above goal will not result in any functional gains for the students (their memory abilities will not magically improve as a result of it). Instead, targeting specific language and conceptual goals (e.g., answering questions, producing complex sentences, etc.) and increasing the students’ overall listening comprehension and verbal expression will result in improvements in the areas of attention, memory, and processing, including their ability to follow complex directions.

There you have it! Ten practical suggestions from Dr. Kamhi, ready for immediate implementation! For more information, I highly recommend reading the other articles in the same clinical forum, all of which offer highly practical and relevant ideas for therapeutic implementation. They include:

- Clinical Scientists Improving Clinical Practices: In Thoughts and Actions

- Approaching Early Grammatical Intervention From a Sentence-Focused Framework

- What Works in Therapy: Further Thoughts on Improving Clinical Practice for Children With Language Disorders

- Improving Clinical Practice: A School-Age and School-Based Perspective

- Improving Clinical Services: Be Aware of Fuzzy Connections Between Principles and Strategies

- One Size Does Not Fit All: Improving Clinical Practice in Older Children and Adolescents With Language and Learning Disorders

- Language Intervention at the Middle School: Complex Talk Reflects Complex Thought

- Using Our Knowledge of Typical Language Development

References:

Kamhi, A. (2014). Improving clinical practices for children with language and learning disorders. Language, Speech, and Hearing Services in Schools, 45(2), 92-103.

New Products for the 2017 Academic School Year for SLPs

September is quickly approaching, and school-based speech-language pathologists (SLPs) are preparing to go back to work. Many of them are looking to update their arsenal of speech and language materials for the upcoming academic year.

With that in mind, I wanted to update my readers regarding all the new products I have recently created with a focus on assessment and treatment in speech-language pathology.