Frequently, I see a variation of the following scenario on many speech and language forums.

The SLP is seeing a client with speech and/or language deficits through early intervention, in the schools, or in private practice, and the client is exhibiting some kind of behavioral issues.

Some issues are described as mild, such as calling out, hyperactivity, impulsivity, or inattention, while others are more severe and include refusal, noncompliance, or aggression such as kicking, biting, or punching.

An array of advice from well-meaning professionals immediately follows. Some behaviors may be labeled as “normal” due to the child’s age (toddler), others may be “partially excused” due to a DSM-5 diagnosis (e.g., ASD). Recommendations for reinforcement charts (not grounded in evidence) may be suggested. A call for other professionals to deal with the behaviors is frequently made (“in my setting the ______ (insert relevant professional here) deals with these behaviors and I don’t have to be involved”). Specific judgments on the child may be pronounced: “There is nothing wrong with him/her, they’re just acting out to get what they want.” Some drastic recommendations could be made: “Maybe you should stop therapy until the child’s behaviors are stabilized”.

However, several crucial factors often get overlooked: first, a system for figuring out why a particular set of behaviors takes place, and second, whether these behaviors may be manifestations of non-behaviorally based difficulties such as medical issues or overt/subtle linguistically based deficits.

So what are some reasons kids may present with behavioral deficits? Obviously, there could be numerous reasons: some benign while others serious, ranging from lack of structure and understanding of expectations to manifestations of psychiatric illnesses and genetic syndromes. Oftentimes the underlying issues are incredibly difficult to recognize without a differential diagnosis. In other words, we cannot claim that the child’s difficulties are “just behavior” if we have not appropriately ruled out other causes which may be contributing to the “behavior”.

Here are some possible steps which can help ensure appropriate identification of the source of the child’s behavioral difficulties in cases of hidden underlying language disorders (after, of course, relevant learning, genetic, medical, and psychiatric issues have been ruled out).

Let’s begin by answering a few simple questions. Has a thorough language evaluation with an emphasis on the child’s social pragmatic language abilities been completed? And by thorough, I am not referring to general language tests but to a variety of formal and informal social pragmatic language testing (read more HERE).

Please note that none of the general language tests, such as the Preschool Language Scale-5 (PLS-5), the Comprehensive Assessment of Spoken Language (CASL-2), the Test of Language Development-4 (TOLD-4), or even the Clinical Evaluation of Language Fundamentals tests (CELF-P2/CELF-5), tap into the child’s social language competence, because they do NOT directly test the child’s social language skills (e.g., the CELF-5 assesses them via a parent/teacher questionnaire). Thus, many children can attain average scores on these tests yet still present with pervasive social language deficits. That is why it’s very important to thoroughly assess the social pragmatic language abilities of all children (no matter what their age is) presenting with behavioral deficits.

But let’s say that the social pragmatic language abilities have been assessed and the child was found eligible (or not eligible) for services. Meanwhile, the behavioral deficits persist. What do we do now?

The first step in establishing a behavior management system is determining the function of challenging behaviors, since we need to understand why the behavior is occurring and what is triggering it (Chandler & Dahlquist, 2006).

We can begin by performing some basic data collection with a child of any age (even with toddlers) to determine behavior functions or reasons for specific behaviors. Here are just a few limited examples:

- Seeking Attention/Reward

- Seeking Sensory Stimulation

- Seeking Control

Most behavior functions tend to be positively, negatively, or automatically reinforced (Bobrow, 2002). For example, in cases of positive reinforcement, the child may exhibit challenging behaviors to obtain desirable items such as toys, games, attention, etc. If the parent/teacher inadvertently supplies the child with the desired item, they are positively reinforcing the inappropriate behavior and strengthening the child’s desire to repeat the experience over and over again, since it worked for them before.

In contrast, negative reinforcement takes place when the child exhibits challenging behaviors to escape a negative situation and gets his way. For example, the child is being disruptive in classroom/therapy because the tasks are too challenging and is ‘rewarded’ when therapy is discontinued early or when the classroom teacher asks an aide to take the child for a walk.

Finally, automatic reinforcement occurs when certain behaviors, such as repetitive movements or self-injury, produce an enjoyable sensation for the child, who then repeats the behavior to recreate the sensation.

In order to determine what reinforces the child’s challenging behaviors, we must perform repeated observations and take data on the following:

- Antecedent: What triggered the child’s behavior?

  - What was happening immediately before the behavior occurred?

- Behavior

  - What type of challenging behavior(s) took place as a result?

- Response/Consequence

  - How did you respond to the behavior when it took place?

Here are just a few antecedent examples:

- Therapist requested that child work on task

- Child bored with task

- Favorite task/activity taken away

- Child could not obtain desired object/activity
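The antecedent/behavior/consequence data described above can be kept in a very simple log and tallied to see which pairings recur. The following is only a minimal sketch: the `AbcRecord` and `tally_patterns` names and the sample observations are hypothetical and invented for illustration, not taken from any published protocol.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class AbcRecord:
    """One observation: what came before, what the child did, what followed."""
    antecedent: str
    behavior: str
    consequence: str


def tally_patterns(records):
    """Count how often each (antecedent, consequence) pairing occurs."""
    return Counter((r.antecedent, r.consequence) for r in records)


# Hypothetical observations, invented for illustration only.
observations = [
    AbcRecord("task requested", "refusal", "task removed"),
    AbcRecord("task requested", "kicking", "task removed"),
    AbcRecord("favorite toy taken away", "crying", "toy returned"),
    AbcRecord("task requested", "refusal", "task removed"),
]

patterns = tally_patterns(observations)
most_common_pair, count = patterns.most_common(1)[0]
print(most_common_pair, count)  # ('task requested', 'task removed') 3
```

In this made-up log, a task request is repeatedly followed by the task being removed, which would be consistent with behavior maintained by escape (negative reinforcement). A tally like this only suggests a hypothesis to check against further observation; it does not establish the function of the behavior on its own.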

To figure out what triggers and reinforces the behaviors, we need to collect data before we can address them appropriately. After the data is collected, the goals need to be prioritized based on urgency/seriousness. We can also use modification techniques aimed at managing interfering behaviors. These techniques include modifications of physical space, session structure, and session materials, as well as of the child’s behavior. As we implement these modifications, we need to keep in mind the child’s maintaining factors, or factors which contribute to the maintenance of the problem (Klein & Moses, 1999). These include cognitive, sensorimotor, psychosocial, and linguistic deficits.

We also need to choose our reward system wisely, since the most effective systems which facilitate positive change actually utilize intrinsic rewards (pride in self for own accomplishments) (Kohn, 2001). We need to teach the child positive replacement behaviors to replace the use of negative ones, with an emphasis on self-talk, critical thinking, as well as talking about the problem vs. acting out behaviorally.

Of course, it is very important that we utilize a team-based approach and involve all the professionals who take part in the child’s care, as well as the child’s parents, in order to ensure smooth and consistent carryover across all settings. Consistency is a huge part of all behavior plans, as it optimizes intervention results and helps achieve the desired therapy outcomes.

So the next time a client on your caseload is acting out, don’t be so hasty in judging their behavior when you have no idea what the reasons for it are. Troubleshoot using appropriate and relevant steps in order to figure out what is REALLY going on, and then attempt to change the situation in a team-based, systematic way.

References:

- Bobrow, A. (2002). Problem behaviors in the classroom: What they mean and how to help. Functional Behavioral Assessment, 7 (2), 1–6.

- Chandler, L.K., & Dahlquist, C.M. (2006). Functional assessment: Strategies to prevent and remediate challenging behavior in school settings (2nd ed.). Upper Saddle River, NJ: Pearson Education, Inc.

- Klein, H., & Moses, N. (1999). Intervention planning for children with communication disorders: A guide to the clinical practicum and professional practice (2nd ed.). Boston, MA: Allyn & Bacon.

- Kohn, A. (2001, Sept). Five reasons to stop saying “good job!”. Young Children. Retrieved from http://www.alfiekohn.org/parenting/gj.htm

Several days ago I had a conversation with the Associate Director of Continuing Education at ASHA regarding my significant concerns about the content and quality of some of ASHA approved continuing education courses. For many months before that, numerous discussions took place in a variety of major SLP related Facebook groups, pertaining to the non-EBP content of some of ASHA approved provider coursework, many of which was blatantly pseudoscientific in nature.

Several days ago I had a conversation with the Associate Director of Continuing Education at ASHA regarding my significant concerns about the content and quality of some of ASHA approved continuing education courses. For many months before that, numerous discussions took place in a variety of major SLP related Facebook groups, pertaining to the non-EBP content of some of ASHA approved provider coursework, many of which was blatantly pseudoscientific in nature. In July 2015 I wrote a blog post entitled: “Why (C) APD Diagnosis is NOT Valid!”

In July 2015 I wrote a blog post entitled: “Why (C) APD Diagnosis is NOT Valid!” This month I am joining the ranks of bloggers who are blogging about research related to the field of speech pathology. Click

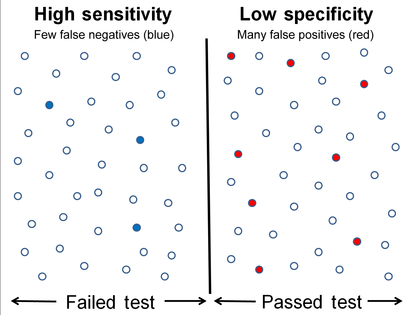

This month I am joining the ranks of bloggers who are blogging about research related to the field of speech pathology. Click  Here’s a familiar scenario to many SLPs. You’ve administered several standardized language tests to your student (e.g., CELF-5 & TILLS). You expected to see roughly similar scores across tests. Much to your surprise, you find that while your student attained somewhat average scores on one assessment, s/he had completely bombed the second assessment, and you have no idea why that happened.

Here’s a familiar scenario to many SLPs. You’ve administered several standardized language tests to your student (e.g., CELF-5 & TILLS). You expected to see roughly similar scores across tests. Much to your surprise, you find that while your student attained somewhat average scores on one assessment, s/he had completely bombed the second assessment, and you have no idea why that happened. Actually, my last statement is rather debatable. A careful perusal of the CELF – 5 reveals that its normative sample of 3000 children included a whopping 23% of children with language-related disabilities. In fact, the folks from the

Actually, my last statement is rather debatable. A careful perusal of the CELF – 5 reveals that its normative sample of 3000 children included a whopping 23% of children with language-related disabilities. In fact, the folks from the

The TILLS is a far less known assessment than the CELF-5 yet in the few years it has been out on the market it really made its presence felt by being a solid assessment tool due to its valid and reliable psychometric properties. Again, the venerable Dr. Carol Westby had already done such an excellent job reviewing its

The TILLS is a far less known assessment than the CELF-5 yet in the few years it has been out on the market it really made its presence felt by being a solid assessment tool due to its valid and reliable psychometric properties. Again, the venerable Dr. Carol Westby had already done such an excellent job reviewing its  Then there’s a variation of this assertion, which I have seen in several Facebook groups: “

Then there’s a variation of this assertion, which I have seen in several Facebook groups: “ Frequently, I see a variation of the following scenario on many speech and language forums.

Frequently, I see a variation of the following scenario on many speech and language forums. Let’s begin by answering a few simple questions. Was a thorough language evaluation with an emphasis on the child’s social pragmatic language abilities been completed? And by thorough, I am not referring to general language tests but to a variety of formal and informal social pragmatic language testing (read more

Let’s begin by answering a few simple questions. Was a thorough language evaluation with an emphasis on the child’s social pragmatic language abilities been completed? And by thorough, I am not referring to general language tests but to a variety of formal and informal social pragmatic language testing (read more  Please note that none of the general language tests such as the Preschool Language Scale-5 (PLS-5), Comprehensive Assessment of Spoken Language (CASL-2), the Test of Language Development-4 (TOLD-4) or even the Clinical Evaluation of Language Fundamentals Tests (CELF-P2)/ (CELF-5) tap into the child’s social language competence because they do NOT directly test the child’s social language skills (e.g., CELF-5 assesses them via a parental/teachers questionnaire). Thus, many children can attain average scores on these tests yet still present with pervasive social language deficits. That is why it’s very important to thoroughly assess social pragmatic language abilities of all children (no matter what their age is) presenting with behavioral deficits.

Please note that none of the general language tests such as the Preschool Language Scale-5 (PLS-5), Comprehensive Assessment of Spoken Language (CASL-2), the Test of Language Development-4 (TOLD-4) or even the Clinical Evaluation of Language Fundamentals Tests (CELF-P2)/ (CELF-5) tap into the child’s social language competence because they do NOT directly test the child’s social language skills (e.g., CELF-5 assesses them via a parental/teachers questionnaire). Thus, many children can attain average scores on these tests yet still present with pervasive social language deficits. That is why it’s very important to thoroughly assess social pragmatic language abilities of all children (no matter what their age is) presenting with behavioral deficits.

Today I am excited to review one of the latest products from Busy Bee Speech “Common Core Standards-Based RtI Packet for Language“.

Today I am excited to review one of the latest products from Busy Bee Speech “Common Core Standards-Based RtI Packet for Language“. Today I am doing a product swap and giveaway with Rose Kesting of

Today I am doing a product swap and giveaway with Rose Kesting of

The Test of Silent Word Reading Fluency (TOSWRF-2)

The Test of Silent Word Reading Fluency (TOSWRF-2)